Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Before you start building AI-powered applications using LangChain, the first step is to generate API keys for the core services you’ll be integrating: OpenAI, Groq, and LangSmith. These keys act as secure credentials that allow your app to interact with the respective platforms. Head over to the OpenAI platform and log in to generate your key. For Groq, visit their developer portal to create an account and retrieve your API key, enabling high-speed inference capabilities. Finally, create an account on LangSmith—LangChain’s observability and evaluation platform—to generate your LangSmith API key. With these keys securely stored in your environment variables, you’re ready to start building customized AI workflows that combine the power of LLMs with structured reasoning and monitoring.

Always store your API keys in a .env (environment) file to keep them separate from your source code. This practice helps prevent accidental exposure of sensitive information, especially when pushing code to version control platforms like GitHub. Never share your .env file publicly or commit it to a repository; add it to .gitignore so Git skips it.

Use python-dotenv to load these keys into your app at startup.

# .env file

LANG_SMITH_API_KEY=lsv2_pt_f97xxxxxxxxxxxxxxxxxxxxxxxx

GROQ_API_KEY=gsk_vW0kaSf45RKyxxxxxxxxxxxxxxxxxxxxxxxxxxx

OPENAI_API_KEY=sk-proj-Jtyxzxxxxxxxxxxxxxxxxxxxxxxxxxxx

LangChain is a powerful open-source framework designed to help developers build applications powered by large language models (LLMs) like OpenAI’s GPT. It provides the tools to integrate LLMs with external data sources, APIs, memory, agents, and more, enabling the creation of context-aware, intelligent applications.

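LangChain goes beyond basic LLM queries like “chat completion” or “text generation” by offering building blocks such as prompt templates, chains, and output parsers, each covered in the sections that follow.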

LangChain makes it easy to build applications powered by large language models (LLMs). In this blog, we’ll walk through a basic LangChain example using ChatOpenAI to answer any question.

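from dotenv import load_dotenv

from langchain_openai import ChatOpenAI

load_dotenv()  # load OPENAI_API_KEY from the .env file into the environment

llm = ChatOpenAI(model="o1-mini")  # reads OPENAI_API_KEY from the environment

result = llm.invoke("What is Agentic AI?")  # returns an AIMessage

print(result)  # prints the full message object, including metadata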

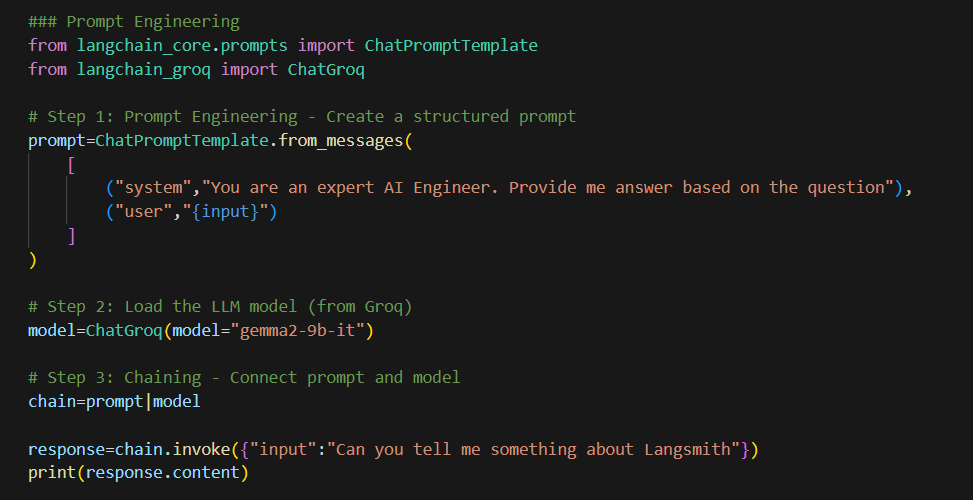

ChatOpenAI is imported from langchain_openai and allows us to connect to OpenAI’s chat models.

"o1-mini" is a lightweight and faster model.

invoke() sends a prompt to the LLM.

print(result) displays the output.

Prompt Engineering is the process of designing structured inputs (prompts) to guide AI models to give accurate and relevant responses. In LangChain, we use ChatPromptTemplate to create prompts with specific roles like system and user. For example, we can instruct the AI to behave like an “expert AI Engineer” and respond to any user question accordingly. This makes the model’s output more targeted and professional.

Next, we bring in the concept of Chaining, which is a powerful feature in LangChain. Chaining allows us to connect a prompt directly to a language model using the | operator. This creates a smooth pipeline where the prompt is passed to the model automatically. It’s a clean and efficient way to build intelligent workflows, chatbots, or task-specific AI tools.

Here’s a simple code example that brings both ideas together:

With just a few lines of code, you’ve created a custom AI response system that follows your instructions and answers user questions intelligently. This approach is perfect for building AI-powered tools with clear, structured logic.

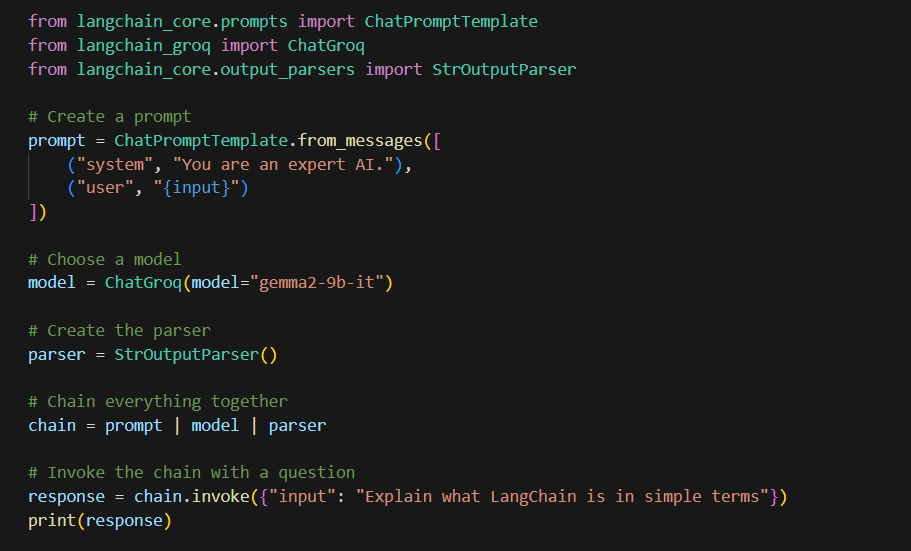

Now that we’ve covered the concepts of Prompt Engineering and Chaining, you’ve seen how we design structured prompts and connect them with AI models to get intelligent responses. The next step in the workflow is understanding how to parse the output — this is where Output Parsers come in. Output parsers help us extract and format the AI’s response in a clean and usable way, especially when we need to work with specific data formats like text, JSON, or structured fields.

Once we receive a response from a language model using prompt engineering and chaining, the next challenge is extracting clean, structured, and usable data from that output. This is where Output Parsers come into play in LangChain.

An Output Parser is a tool that helps convert the raw AI response (which is usually plain text) into a more usable format like structured text, JSON, numbers, or even specific Python objects. This is especially useful when you’re building applications that depend on clean data — like dashboards, chatbots, data pipelines, or AI agents.

LangChain provides several built-in output parsers, and you can also create your own custom ones.

When working with AI-generated responses, sometimes all you need is the plain text — no formatting, no structure, just the actual string content. That’s exactly what the StrOutputParser is for in LangChain.

StrOutputParser is the most basic and commonly used output parser in LangChain. It simply extracts the raw text (a string) from the AI’s response. This is useful when you don’t need to convert the output into JSON or any special format — just a direct, readable answer.

In short, StrOutputParser is perfect when your goal is to keep things simple and readable. It’s often used as the default parser when working with chat or text-generation models in LangChain.

There are several other output parsers available in LangChain, each designed for different use cases. Some commonly used ones include:

StrOutputParser – for plain text output

CommaSeparatedListOutputParser – to parse comma-separated values into a list

PydanticOutputParser – for structured data using Pydantic models

JsonOutputKeyToolsParser – to extract the tool calls that match a given key name from a model’s tool-call response

You can explore all available output parsers and their usage in detail here:

👉 LangChain Output Parsers Documentation

In conclusion, building intelligent AI workflows using LangChain becomes more powerful and organized when we combine key concepts like Prompt Engineering, Chaining, and Output Parsers. Prompts help us guide the model’s behavior, chaining allows us to connect components smoothly, and output parsers ensure the AI’s responses are clean, structured, and usable in real applications. By understanding and applying these tools, you can develop more reliable and production-ready AI solutions with minimal effort.