Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

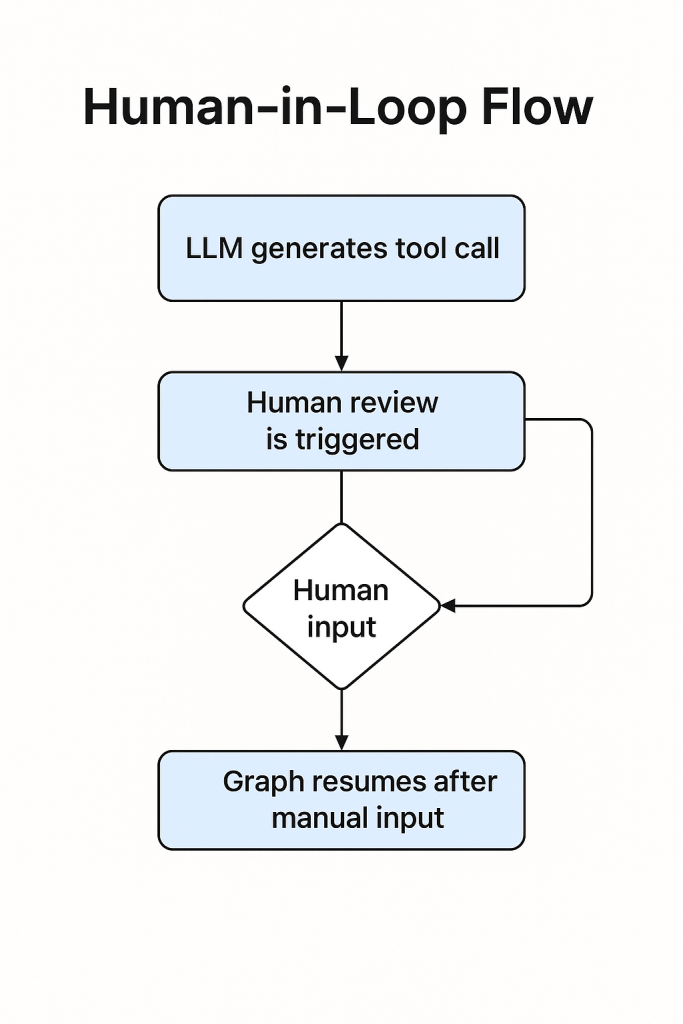

Automation without accountability is risky. LangGraph with Human-in-the-Loop (HITL) is an approach for building safe, controllable, and modular agent workflows on LangChain’s LangGraph framework. Whether you’re building an AI assistant for legal review, customer support, or sensitive decision-making, HITL keeps humans in control at critical checkpoints.

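In this blog, we’ll walk through a real-world use case, setting up the LLM and tools, defining the agent state, adding a human review node with interrupt(), and resuming the graph with Command(resume=...).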

Imagine a law firm using an AI assistant to draft contracts. You want the AI to handle the drafting work, but before the final draft is sent, a human lawyer must approve it. That’s the perfect use case for LangGraph with HITL.

    from dotenv import load_dotenv
    from langchain_groq import ChatGroq

    # Load API keys (e.g. GROQ_API_KEY) from a local .env file
    load_dotenv()

    llm = ChatGroq(model_name="deepseek-r1-distill-llama-70b")

    from langchain_core.tools import tool
    from langchain_community.tools.tavily_search import TavilySearchResults

    @tool
    def add(x: int, y: int) -> int:
        """Add two integers and return the sum."""
        return x + y

    @tool
    def search(query: str) -> str:
        """Search the web with Tavily and return the results as text."""
        tavily = TavilySearchResults()
        result = tavily.invoke(query)
        return f"Result for {query}:\n{result}"

    # Expose both tools to the model
    tools = [add, search]
    llm_with_tools = llm.bind_tools(tools)

You can test tool binding with:

    result = llm_with_tools.invoke("What is the capital of India?")
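
When a bound model decides to use a tool, it does not run the function itself. It returns a structured request naming the tool and its arguments, and your code executes it. Below is a minimal sketch of that dispatch step in plain Python, with no LangChain dependency. The `example_call` dictionary is a hand-written stand-in shaped like the `name` and `args` fields of a LangChain tool call, and `TOOL_REGISTRY` and `run_tool_call` are hypothetical names introduced for illustration.

```python
from typing import Any, Callable, Dict


def add(x: int, y: int) -> int:
    """Add two integers and return the sum."""
    return x + y


# Hypothetical registry mapping tool names to plain Python callables.
TOOL_REGISTRY: Dict[str, Callable[..., Any]] = {"add": add}


def run_tool_call(tool_call: Dict[str, Any]) -> Any:
    """Look up the requested tool by name and call it with the given arguments."""
    name = tool_call["name"]
    if name not in TOOL_REGISTRY:
        raise KeyError(f"Unknown tool requested: {name}")
    return TOOL_REGISTRY[name](**tool_call["args"])


# Hand-written stand-in for a tool request a model might emit.
example_call = {"name": "add", "args": {"x": 2, "y": 3}}
print(run_tool_call(example_call))
```

Rejecting unknown tool names up front keeps a malformed request from silently doing nothing.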

LangGraph requires a state model. Here’s our basic structure:

    import operator
    from typing import Annotated, Sequence, TypedDict

    from langchain_core.messages import BaseMessage

    class AgentState(TypedDict):
        # operator.add appends each node's new messages instead of overwriting
        messages: Annotated[Sequence[BaseMessage], operator.add]

This state tracks all messages exchanged with the agent.
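
The `Annotated[..., operator.add]` hint tells LangGraph how to combine a node's returned update with the existing value: rather than overwriting `messages`, it concatenates. Here is a rough sketch of that merge rule in plain Python, assuming list-valued fields and using strings in place of `BaseMessage` objects. `SketchState` and `apply_update` are hypothetical names for illustration, not part of LangGraph's API.

```python
import operator
from typing import Annotated, Any, Dict, List, TypedDict, get_type_hints


class SketchState(TypedDict):
    # Same pattern as AgentState, with plain strings standing in for messages.
    messages: Annotated[List[str], operator.add]
    draft: str  # no reducer annotated: new values overwrite old ones


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Merge an update into state, using each key's annotated reducer if present."""
    hints = get_type_hints(SketchState, include_extras=True)
    merged = dict(state)
    for key, new_value in update.items():
        metadata = getattr(hints[key], "__metadata__", ())
        if metadata and key in merged:
            reducer = metadata[0]
            merged[key] = reducer(merged[key], new_value)
        else:
            merged[key] = new_value
    return merged


state = {"messages": ["Get me GDP of India"], "draft": "v1"}
state = apply_update(state, {"messages": ["GDP of India"], "draft": "v2"})
print(state)
```

The `messages` list grows with each update while `draft` is simply replaced, which is why a node only needs to return its new messages.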

This is where LangGraph truly shines by allowing us to pause execution and involve a human reviewer mid-agent run.

    from langgraph.types import interrupt
    from langchain_core.messages import HumanMessage

    def human_node(state: AgentState):
        # Pause the graph and request manual review
        value = interrupt({
            "text_to_revise": state["messages"][-1].content
        })
        # After resuming, `value` holds whatever the reviewer supplied
        return {
            "messages": [HumanMessage(content=value["text_to_revise"])]
        }

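What is interrupt() doing?

The interrupt() function in LangGraph is a controlled pause point. When this node executes, the graph stops, the checkpointer saves the current state, and the payload passed to interrupt() is returned to the caller under the __interrupt__ key. Simplified, the result looks like this:

    {
        "__interrupt__": {
            "text_to_revise": "GDP of India"
        }
    }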

This output can now be shown in a UI or CLI to ask the user: “Do you want to approve or revise this?”
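
One way to turn that payload into an approve-or-revise prompt is a small command-line helper. This is a sketch under assumptions: `build_resume_value` is a hypothetical name, the payload is taken to be the plain dictionary that was passed to `interrupt()`, and an empty reply is treated as approval.

```python
from typing import Callable, Dict


def build_resume_value(
    payload: Dict[str, str],
    ask: Callable[[str], str] = input,
) -> Dict[str, str]:
    """Show the text awaiting review and return the value to resume with.

    An empty reply approves the text unchanged; anything else replaces it.
    """
    original = payload["text_to_revise"]
    prompt = (
        f"Review this text:\n  {original}\n"
        "Press Enter to approve, or type a revision: "
    )
    reply = ask(prompt)
    revised = reply.strip() or original
    # Same key the human node reads from the value returned by interrupt().
    return {"text_to_revise": revised}


# Scripted replies stand in for a real reviewer so the example runs unattended.
approved = build_resume_value({"text_to_revise": "GDP of India"}, ask=lambda _: "")
revised = build_resume_value(
    {"text_to_revise": "GDP of India"}, ask=lambda _: "GDP of India in 2023"
)
print(approved)
print(revised)
```

Passing the prompt function as a parameter keeps the helper testable; in a real CLI the default `input` would collect the reviewer's reply.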

interrupt and resume

    from langgraph.graph import StateGraph, END
    from langgraph.checkpoint.memory import MemorySaver

    graph_builder = StateGraph(AgentState)
    graph_builder.add_node("human", human_node)
    graph_builder.set_entry_point("human")
    graph_builder.set_finish_point("human")

    # interrupt() needs a checkpointer so the paused state can be restored
    graph = graph_builder.compile(checkpointer=MemorySaver())

Run the graph and observe how it halts at the human review node:

    # The thread_id identifies this run so it can be resumed later
    config = {"configurable": {"thread_id": "session-001"}}

    result = graph.invoke(
        {"messages": [HumanMessage(content="Get me GDP of India")]},
        config=config,
    )
    print(result["__interrupt__"])

How do you resume with Command(resume=...)?

Once the human has revised or approved the content, you can resume the graph using:

    from langgraph.types import Command

    graph.invoke(
        Command(resume={"text_to_revise": "GDP of India"}),
        config=config,
    )

Command(resume={...}) tells LangGraph to continue from the pause point. The value you pass becomes the return value of the interrupt() call.

User prompt ➔ LLM generates response ➔ Human Node ➔ interrupt() ➔ waits for input ➔ Command(resume=...) with approved text ➔ execution continues
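
The pause-and-resume cycle can be imitated in a few lines of plain Python. The toy below is not LangGraph's implementation: `ToyRunner`, `PauseForHuman`, and `toy_interrupt` are hypothetical names, and an in-memory dictionary keyed by thread ID plays the role of the checkpointer. It does mirror one behavior worth knowing about the real library: on resume, the node runs again from its start, and this time the interrupt call returns the human's value instead of pausing.

```python
from typing import Any, Callable, Dict, Optional


class PauseForHuman(Exception):
    """Raised by toy_interrupt when no resume value is available yet."""

    def __init__(self, payload: Any) -> None:
        super().__init__("waiting for human input")
        self.payload = payload


class ToyRunner:
    """Runs a single node and remembers paused state per thread ID."""

    def __init__(self, node: Callable[["ToyRunner", Dict[str, Any]], Dict[str, Any]]) -> None:
        self.node = node
        self.saved_states: Dict[str, Dict[str, Any]] = {}  # stand-in checkpointer
        self._resume_value: Optional[Any] = None

    def toy_interrupt(self, payload: Any) -> Any:
        # With a resume value on hand, return it; otherwise pause the run.
        if self._resume_value is not None:
            return self._resume_value
        raise PauseForHuman(payload)

    def start(self, thread_id: str, state: Dict[str, Any]) -> Dict[str, Any]:
        return self._run(thread_id, state, None)

    def resume(self, thread_id: str, resume_value: Any) -> Dict[str, Any]:
        # Reload the saved state and re-run the node from its first line.
        return self._run(thread_id, self.saved_states.pop(thread_id), resume_value)

    def _run(self, thread_id: str, state: Dict[str, Any], resume_value: Any) -> Dict[str, Any]:
        self._resume_value = resume_value
        try:
            return self.node(self, state)
        except PauseForHuman as pause:
            self.saved_states[thread_id] = state
            return {"__interrupt__": pause.payload}
        finally:
            self._resume_value = None


def toy_human_node(runner: ToyRunner, state: Dict[str, Any]) -> Dict[str, Any]:
    value = runner.toy_interrupt({"text_to_revise": state["messages"][-1]})
    return {"messages": state["messages"] + [value["text_to_revise"]]}


runner = ToyRunner(toy_human_node)
paused = runner.start("session-001", {"messages": ["Get me GDP of India"]})
final = runner.resume("session-001", {"text_to_revise": "GDP of India"})
print(paused)
print(final)
```

Because the node is re-run from the top on resume, any code placed before the interrupt call executes twice, so side effects there should be safe to repeat.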

| Component | Role |
|---|---|
| ChatGroq | Base LLM for drafting |
| AgentState | Shared state across nodes |
| human_node | Manual decision point using interrupt |
| Command(resume) | Resumes graph execution after approval |
| LangGraph | Manages node execution + state tracking |

LangGraph makes it easy to combine powerful AI agents with human intelligence, which makes it a good fit for real-world production workflows.

With built-in support for pause/resume logic, interrupt() and Command(resume=...) form the foundation for trustworthy AI automation, letting teams deploy LLMs with confidence where oversight matters.