Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

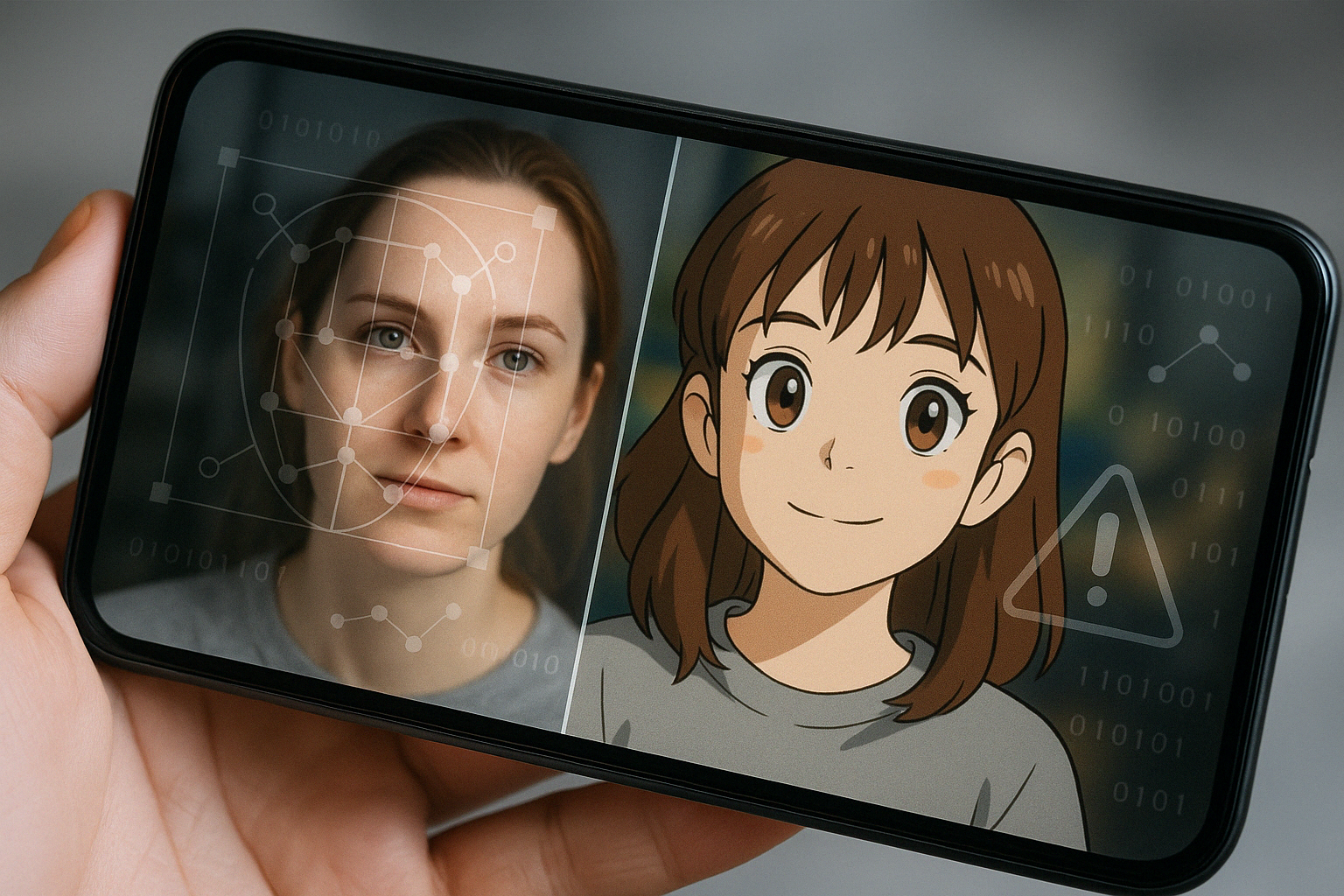

Scroll through your social media, and you’ll likely see them: charming, Ghibli-esque portraits of friends, colleagues, and even pets. The latest viral trend uses AI image generators, often integrated with platforms like ChatGPT, to turn everyday photos into whimsical anime art. It’s captivating, fun, and instantly shareable. But as we happily upload our selfies for this digital makeover, a critical question, explored in a recent Firstpost report, looms: how safe is your data? And more importantly, how exactly can a simple photo upload put your privacy at risk? It turns out there’s more to that image than meets the eye.

The appeal is clear. Tools like OpenAI’s image generator allow users to effortlessly blend their own photos with distinct artistic styles. The Ghibli filter craze saw everything from personal portraits to famous memes reimagined, flooding platforms and even causing temporary service outages due to sheer demand. OpenAI’s CEO Sam Altman even pleaded for users to “please chill,” highlighting the massive engagement these tools generate. They feel like harmless digital toys.

“can yall please chill on generating images this is insane our team needs sleep.” (Sam Altman)

When you upload a selfie for that Ghibli-style AI makeover, it’s not just fun — you’re handing over valuable data. According to OpenAI’s privacy policy, personal inputs (including images) may be used to train AI models unless you opt out.

Here’s what your photo reveals:

That free filter? You’re paying with data — your face, your habits, your life.

The Dark Side: How Image Data Can Be Misused

Once your photo is uploaded, it can fuel more than just AI art. Here’s how it could be exploited:

That innocent upload? It may have long-term consequences you didn’t sign up for.

The Ghibli trend is just one piece of a larger pattern where personal data fuels AI systems:

Your biology, habits, and health — all are becoming data points in a growing AI-driven ecosystem.

Sharing your data – whether a selfie, health metrics, or DNA – with these platforms carries significant risks:

AI is exciting — but every interaction may come at the cost of your personal data. Here’s how to stay safe:

Even ChatGPT says it: enjoy the tech, but protect your data.

From playful Ghibli filters to AI-powered health tools, artificial intelligence is weaving itself into our daily lives — powered by one thing: our data.

A simple image upload can reveal biometric and contextual details that, once collected, may be used for anything from personalization to deepfakes and surveillance.
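
To make "contextual details" concrete: one such detail is the metadata a camera or phone can embed in a JPEG file (EXIF data, which may include the device model, a timestamp, and sometimes GPS coordinates). The article does not enumerate which details it means, so treating metadata as the example here is an assumption. The sketch below uses only the Python standard library and a hypothetical helper named `strip_exif` to drop the APP1 segments, where EXIF is stored, from a JPEG's bytes before the file is shared. It is a minimal illustration, not a complete metadata scrubber, and it does nothing about the biometric information in the picture itself.

```python
import struct

SOI = b"\xff\xd8"   # JPEG start-of-image marker
APP1 = 0xE1         # segment type that carries EXIF (and XMP) metadata
SOS = 0xDA          # start of scan: compressed image data follows


def strip_exif(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG byte string without its APP1 segments.

    Hypothetical helper for illustration; assumes a well-formed baseline
    JPEG whose header segments each carry a 2-byte big-endian length.
    """
    if jpeg[:2] != SOI:
        raise ValueError("not a JPEG: missing start-of-image marker")

    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("malformed JPEG: expected a segment marker")
        marker = jpeg[pos + 1]
        if marker == SOS:
            # Everything from here on is image data; copy it unchanged.
            out += jpeg[pos:]
            return bytes(out)
        # The length field counts itself (2 bytes) plus the payload.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        if marker != APP1:
            out += jpeg[pos:pos + 2 + length]
        pos += 2 + length

    # No scan segment found; keep whatever bytes remain.
    out += jpeg[pos:]
    return bytes(out)
```

A typical use would be to read the photo with `open(path, "rb").read()`, pass the bytes through `strip_exif`, and write the result to a new file before uploading. Dropping APP1 also discards the orientation tag, so some viewers may show the cleaned photo rotated.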

This is a call not for fear but for informed caution. Every upload is a choice. By staying aware of what we share and who we share it with, we can embrace the benefits of AI while protecting our digital identity.