Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

In 2025, LLM-powered apps are transforming industries, from AI assistants to smart automation tools. If you're building an application on GPT-4, Claude, or open-source LLMs, you'll need a robust framework to handle logic, memory, state, and tool usage. Two of the most discussed frameworks today are LangChain and LangGraph.

This guide compares LangGraph vs LangChain with real-world use cases, pros and cons, and a final recommendation for developers, startups, and AI builders.

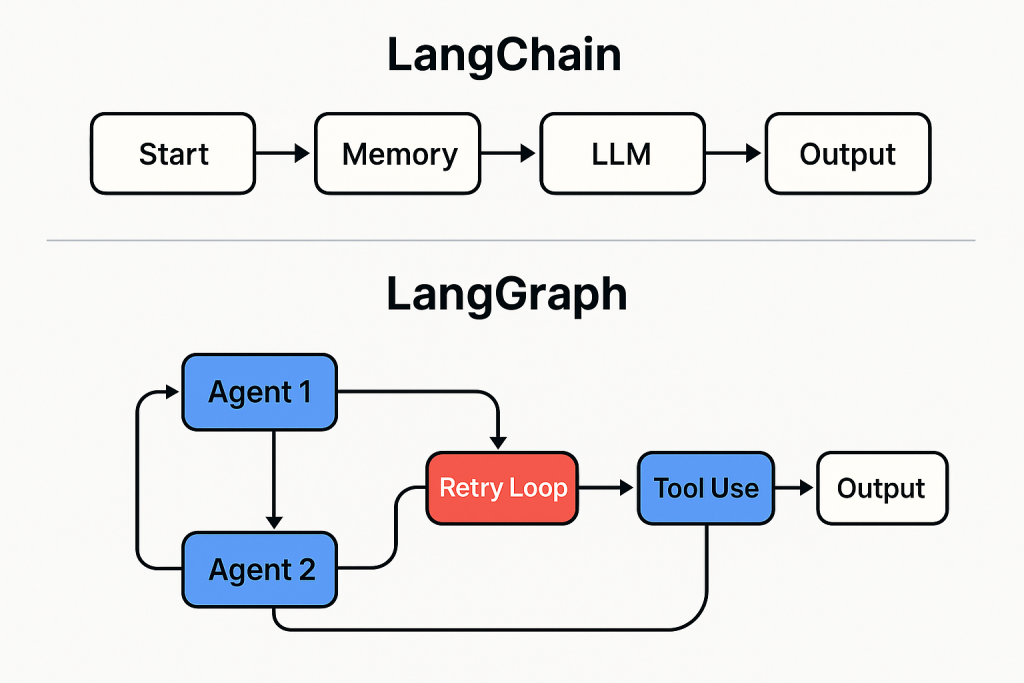

LangChain is a framework for building applications powered by language models. It is best known for its Python library, and a JavaScript/TypeScript version also exists. It abstracts common components like LLM wrappers, memory, chains, and tools to help you get started quickly.
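To make the "chain" idea concrete, here is a minimal, library-free sketch in plain Python. It is not LangChain's API: `make_chain`, `build_prompt`, `fake_llm`, and `parse_output` are hypothetical names invented for illustration, and the "model" is a stub that only echoes its input.

```python
from functools import reduce
from typing import Callable

# A step takes text in and returns text out.
Step = Callable[[str], str]


def make_chain(*steps: Step) -> Step:
    """Compose steps left to right into one callable (hypothetical helper)."""
    def run(text: str) -> str:
        return reduce(lambda value, step: step(value), steps, text)
    return run


# Hypothetical stand-ins for a prompt template, a model call, and an output parser.
def build_prompt(question: str) -> str:
    return f"Answer briefly: {question}"


def fake_llm(prompt: str) -> str:
    # Assumption: a real app would call a model here; this stub echoes the prompt.
    return f"[model reply to: {prompt}]"


def parse_output(reply: str) -> str:
    # Remove the surrounding brackets added by the stub model.
    return reply.strip("[]")


chain = make_chain(build_prompt, fake_llm, parse_output)
result = chain("What is LangChain?")
print(result)
```

The point of the sketch is the shape: data flows in one direction through a fixed sequence of steps, which is easy to read but offers no natural place for loops or branching.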

LangGraph is a newer, graph-based orchestration framework created by the LangChain team. It’s designed for multi-agent, stateful, and cyclical applications using LLMs.

Instead of relying on chains or sequences, LangGraph allows you to build a state machine or directed graph, where each node represents a step (agent, tool, memory access), and edges define the transitions.
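The node-and-edge model can be sketched without any library. The code below is an illustration of the concept only, not LangGraph's actual API: `TinyGraph`, `END`, and the `draft` and `review` nodes are all hypothetical. Each node reads and returns a state dictionary, and each edge is a function that picks the next node, which is what makes a cycle (here, a retry loop) possible.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
Node = Callable[[State], State]
Router = Callable[[State], str]

END = "END"  # sentinel node name chosen for this sketch


class TinyGraph:
    """A toy state machine: nodes transform state, routers choose the next node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routers: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, source: str, router: Router) -> None:
        self.routers[source] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current, steps = start, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("step limit reached; possible infinite loop")
            state = self.nodes[current](state)
            # Record the visited node so the path can be inspected afterwards.
            state["trace"] = state.get("trace", []) + [current]
            current = self.routers[current](state)
            steps += 1
        return state


def draft(state: State) -> State:
    attempts = state.get("attempts", 0) + 1
    return {**state, "attempts": attempts, "draft": f"draft v{attempts}"}


def review(state: State) -> State:
    # Hypothetical rule: approve only from the second attempt onward.
    return {**state, "approved": state["attempts"] >= 2}


graph = TinyGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", lambda s: "review")
graph.add_edge("review", lambda s: END if s["approved"] else "draft")

final = graph.run("draft", {})
print(final["trace"])
```

Because the state carries its own trace, the loop back from `review` to `draft` is visible after the run, which hints at why explicit graphs tend to be easier to debug than nested chains.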

| Feature | LangChain | LangGraph |
|---|---|---|
| Abstraction Level | High-level | Low-to-mid level |
| Loop / Cyclical Support | Limited | Built-in |
| Multi-agent Support | Basic | Advanced |
| Debuggability | Moderate | Excellent with state tracing |
| Use Case Fit | Chatbots, RAG | Agents, planners, workflows |
| Memory Handling | Basic | Flexible + contextual |
| Community Size | Large | Growing |
| Performance Optimization | Less control | More control |

Use Case: You want to build a travel planner agent that:

In LangChain, building this would require juggling chains, callbacks, and memory tracking across steps. Debugging retries would be tough.

With LangGraph, you model this as a graph:

Result: Better clarity, control, and performance.

| Add-On | Works Best With | Use For |
|---|---|---|
| Streamlit | LangChain | Frontend UI for quick demos |
| NetworkX Graph Viewer | LangGraph | Visualizing state transitions |
| Pinecone/ChromaDB | Both | Vector store for semantic search |
| OpenAI Tools API | LangGraph | Agent + Tool usage at scale |

LangChain is still a fantastic framework for getting started with LLMs. But as your app grows in complexity, with multiple agents, retries, tool integrations, and branching logic, LangGraph becomes the better fit. It brings structure, transparency, and state control to your LLM-powered applications.

💡 Pro Tip: Start with LangChain. Graduate to LangGraph when your AI app gets smarter.